Assistive Tech for Specially-Abled Individuals

Create AI speech tools, next-gen prosthetics and apps that open new doors to independence.

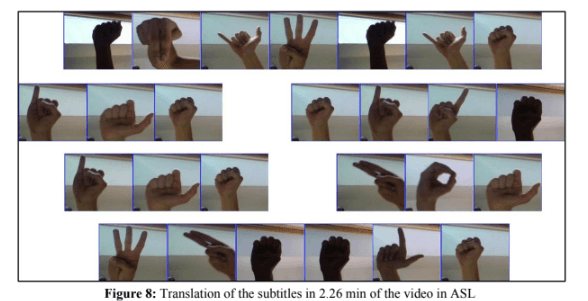

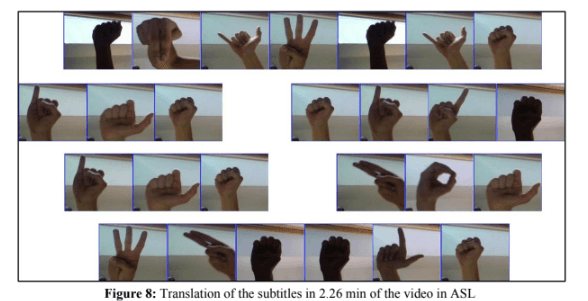

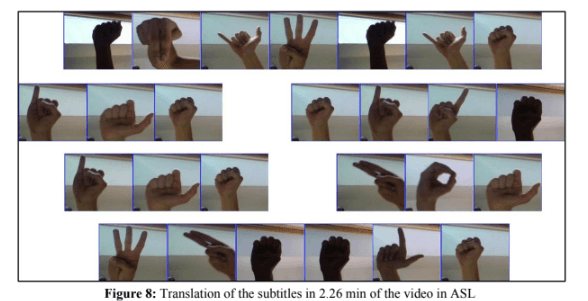

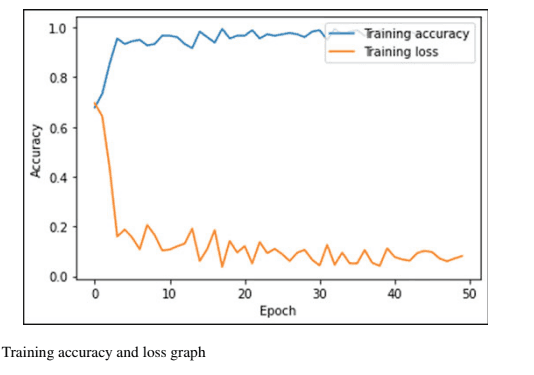

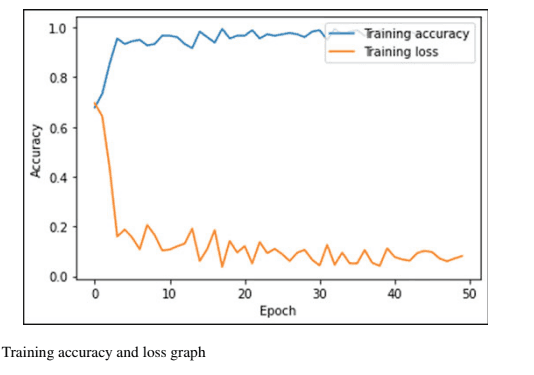

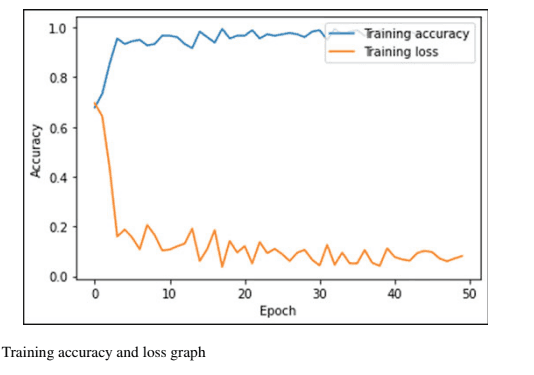

Converting Youtube Video to American Sign Language Translation Using Convolution Neural Network and Video Processing

Sign language is a vital mode of communication for deaf people, who make up around 5% of the global population. Despite the availability of subtitles in various languages, YouTube lacks sign language-based subtitles. This study aims to develop American Sign Language (ASL) subtitles for YouTube videos. The proposed method is a three-phase framework that integrates a deep learning-based Convolutional Neural Network (CNN) with image and video processing techniques. A Torch-based CNN model achieved high accuracy in training and testing (99.982% and 98%, respectively). The study demonstrates the practical application of the integrated method on a randomly chosen YouTube video.

Deep Learning and Computer Vision-Based Attentiveness Tracking for Autistic Students in Online Learning Environments

This project introduces Panacea, a deep-learning application that monitors the attentiveness of autistic students during online classes. Using webcam input, the system analyzes facial expressions, blinking, pupil orientation, and head pose in real time. A machine learning model classifies emotions to refine accuracy, while a probability density function combines outputs from all modules to determine whether a student is “Attentive” or “Non-Attentive,” achieving 97% accuracy. Data is updated every six frames and logged automatically for later review by instructors, enabling better support, personalised intervention, and structured monitoring during virtual learning.

Intelligent Deep-Learning Based App for Music Therapy of Autistic Children

This project presents an intelligent deep-learning application designed to support music therapy for autistic children. Built using Python and Tkinter, the platform creates personalized therapy plans using neural-network–based classification tailored to each child’s medical history, usage patterns, and behavioural trends. The system integrates sound processing, CNN-based music classification, and real-time feedback to adapt sessions dynamically. Cameras and sensors track facial expressions, gestures, and engagement levels, enabling responsive therapeutic interaction. Gamified elements, an AI-curated music library, and multilingual support (English and Hindi) increase accessibility and motivation. Implemented on Raspberry Pi with a child-friendly interface, the application enhances emotional regulation, engagement, and therapy effectiveness for children on the autism spectrum.

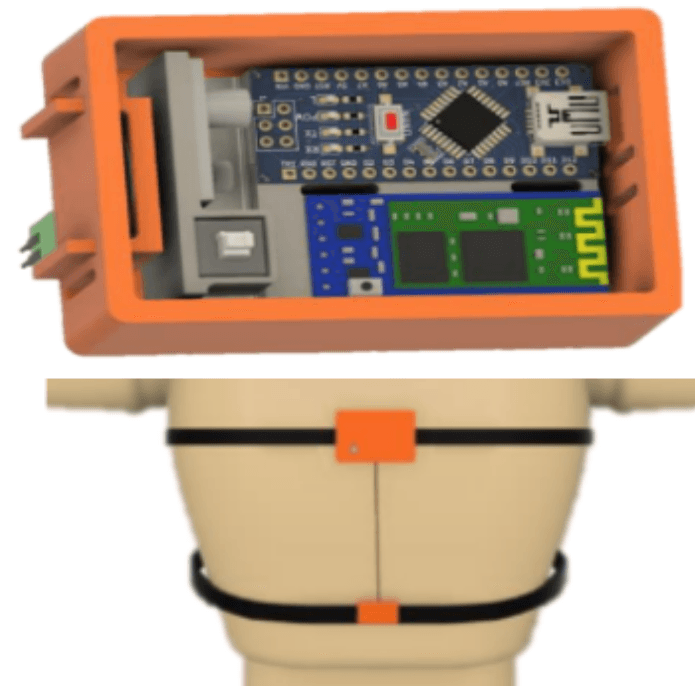

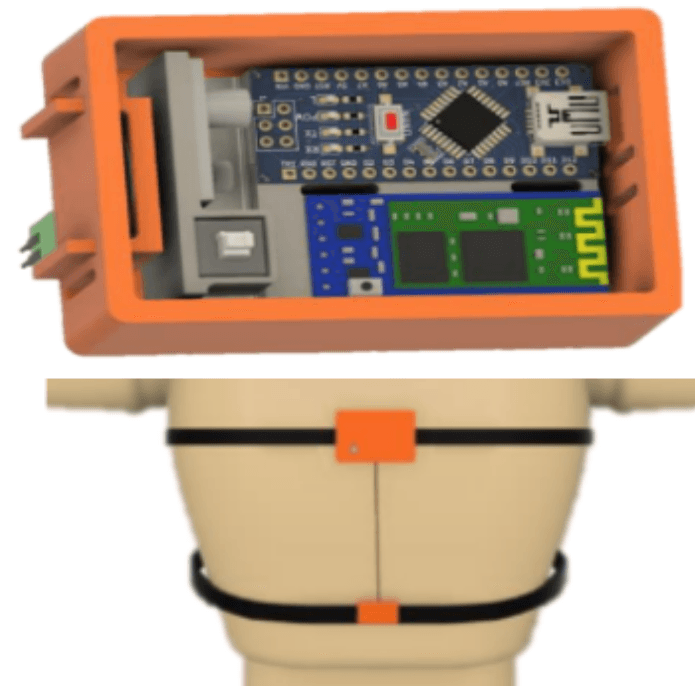

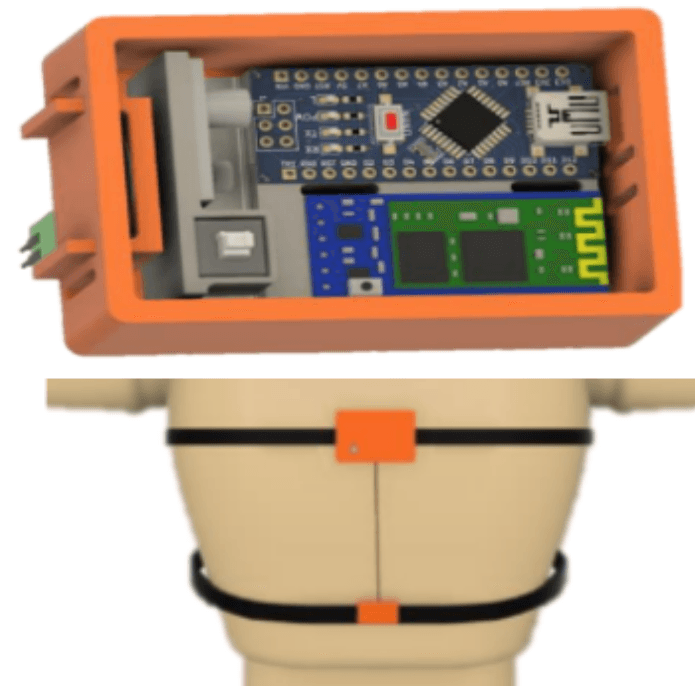

Breath Free – Screening Stress and Anxiety from Breathing Pattern

The product aims to detect stress and anxiety early by measuring breathing patterns as a vital sign. It uses two 3D-printed box-like structures fitted with FSR sensors to measure chest and belly expansion in a non-invasive way. The wireless device connects to a computer via Bluetooth and sends data serially. A Python program collects the data and generates an A4-size infographic report on breathing, including chest and belly breathing patterns, consistency and flow, breathing rate, and the number of deep, shallow, and normal breaths. Testing revealed that the breathing patterns of working professionals in stressful jobs differ from those of school-going children. The device was also tested during gaming activities to assess excitement and stress, which can affect breathing. The product can potentially help control stress and anxiety by monitoring breathing patterns and encouraging proper physical practices.
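As a rough illustration of how a breathing rate and counts of deep, shallow, and normal breaths could be derived from a stream of sensor readings, the following minimal sketch counts local peaks in a sampled signal. The function names, the `min_gap` parameter, and the amplitude thresholds are hypothetical choices for this example and are not taken from the project.

```python
import math
from typing import Dict, List


def find_breath_peaks(samples: List[float], min_gap: int = 2) -> List[int]:
    """Return indices of local maxima (one per breath).

    min_gap is a hypothetical parameter: raise it to ignore peaks that
    follow the previous one too closely (for example, sensor jitter).
    """
    peaks: List[int] = []
    for i in range(1, len(samples) - 1):
        is_local_max = samples[i] > samples[i - 1] and samples[i] >= samples[i + 1]
        if is_local_max and (not peaks or i - peaks[-1] >= min_gap):
            peaks.append(i)
    return peaks


def breathing_rate(samples: List[float], sample_rate_hz: float) -> float:
    """Estimate breaths per minute as detected peaks divided by duration."""
    duration_min = len(samples) / sample_rate_hz / 60.0
    return len(find_breath_peaks(samples)) / duration_min


def classify_breaths(
    samples: List[float], shallow_below: float, deep_above: float
) -> Dict[str, int]:
    """Count breaths by peak height; both thresholds are assumed values."""
    counts = {"shallow": 0, "normal": 0, "deep": 0}
    for i in find_breath_peaks(samples):
        if samples[i] < shallow_below:
            counts["shallow"] += 1
        elif samples[i] > deep_above:
            counts["deep"] += 1
        else:
            counts["normal"] += 1
    return counts


if __name__ == "__main__":
    # Synthetic stand-in for an FSR trace: 0.25 Hz breathing sampled at 10 Hz for 60 s.
    signal = [math.sin(2 * math.pi * 0.25 * (i / 10.0)) for i in range(600)]
    print(breathing_rate(signal, sample_rate_hz=10.0))
    print(classify_breaths(signal, shallow_below=0.5, deep_above=1.5))
```

Real sensor data would likely need smoothing before peak detection, and the thresholds would have to be calibrated per wearer.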

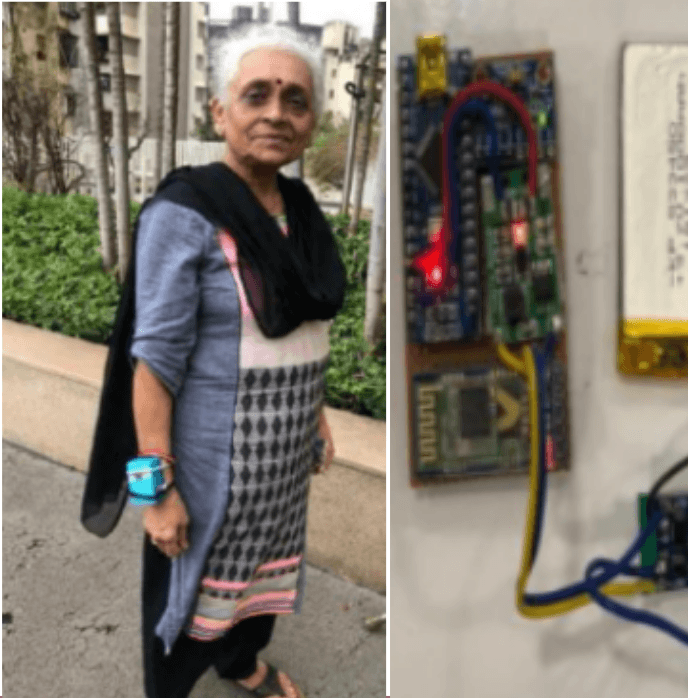

SAATHI – Your Fitness Companion

Our project addresses the lack of regular physical exercise among the elderly by providing a simple and social solution. Through our research, we discovered that many seniors struggle with technology but prefer exercising in groups. To tackle this, we developed an exercise wristband and a mobile app that allows users to track their steps, set goals, and form exercise groups. The app is designed to be user-friendly with clear icons and minimal pages. By connecting with others in the app, users can find motivation and companionship, encouraging them to stay active.

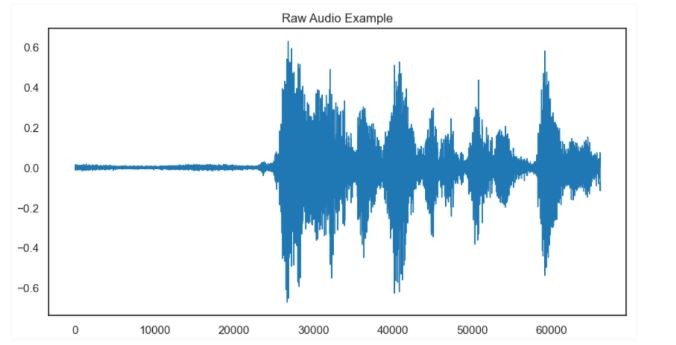

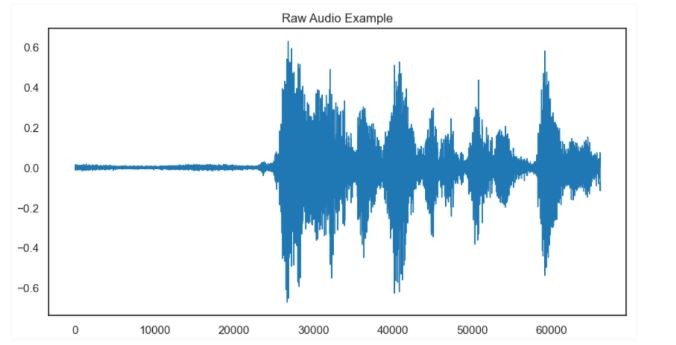

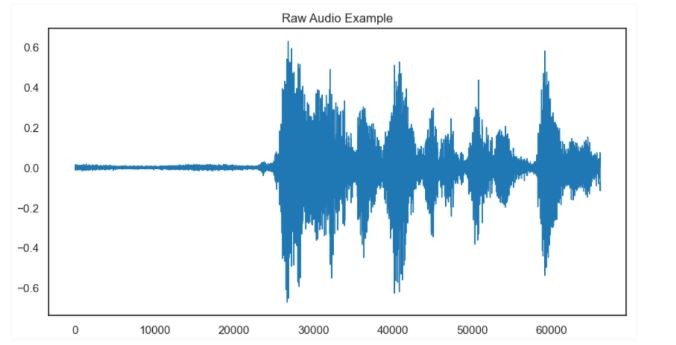

An Artificial Intelligence Integrated Technique for Screening Neurological Diseases with Sound as a Biomarker

This project aims to develop a machine learning-based system for detecting neurological disorders such as Parkinson's disease (PD) and paralysis through voice analysis. Researchers recorded speech samples from patients and healthy individuals and extracted features using Mel-frequency cepstral coefficients (MFCC). K-Nearest Neighbors (kNN) and Convolutional Neural Network (CNN) models were trained on this dataset to classify voices into PD, paralysis, or normal categories. With an overall accuracy rate comparable to that of human laryngologists (60.1% and 56.1%), this system can assist clinicians in identifying the presence and stage of neurological disorders, enabling appropriate treatment recommendations through therapy, medication, or a combination thereof.
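To make the kNN classification step concrete, here is a minimal sketch of a nearest-neighbour vote over feature vectors. It assumes each recording has already been reduced to a fixed-length vector (for example, averaged MFCCs); the two-dimensional vectors below are made-up placeholders for illustration, not data from the project, and `knn_predict` is a hypothetical helper name.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], str]  # (feature vector, label)


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Straight-line distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_predict(train: List[Sample], query: Sequence[float], k: int = 3) -> str:
    """Label a query vector by majority vote among its k nearest training samples."""
    nearest = sorted(train, key=lambda item: euclidean(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Placeholder training set: three toy clusters, one per category.
TOY_TRAIN: List[Sample] = [
    ([1.0, 1.0], "normal"), ([1.2, 0.9], "normal"), ([0.9, 1.1], "normal"),
    ([5.0, 5.0], "PD"), ([5.2, 4.8], "PD"), ([4.9, 5.1], "PD"),
    ([9.0, 1.0], "paralysis"), ([9.1, 1.2], "paralysis"), ([8.8, 0.9], "paralysis"),
]

if __name__ == "__main__":
    print(knn_predict(TOY_TRAIN, [5.1, 5.0]))
```

In practice the feature vectors would have many more dimensions, and `k` would be tuned on held-out recordings.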

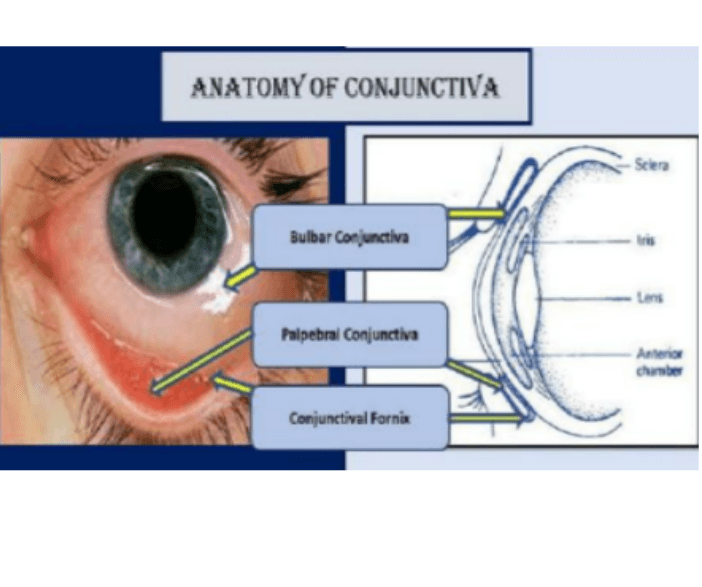

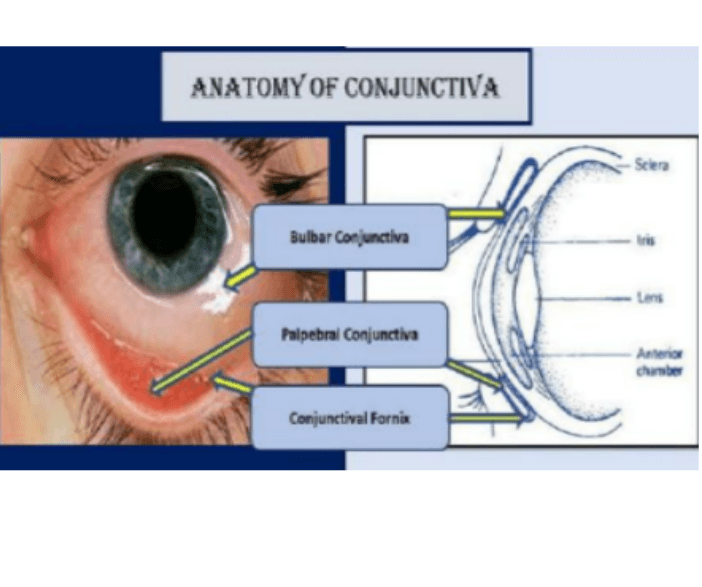

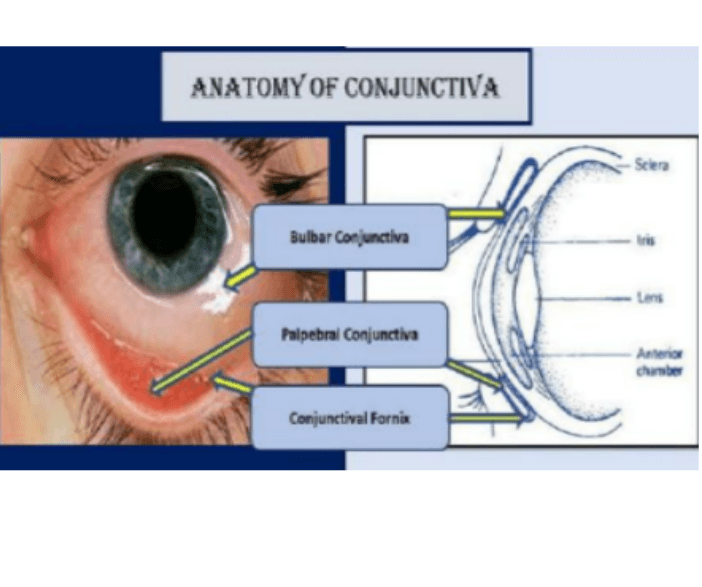

An Autonomous Way to Detect and Quantify Cataracts Using Computer Vision

The project focuses on detecting cataracts using Python, aiming to address limitations in current detection methods. Cataracts, a leading cause of blindness in older individuals, pose challenges for diagnosis, especially in rural areas with limited access to ophthalmologists. To overcome these challenges, we developed a program using Python libraries such as OpenCV, NumPy, and FPDF. This program analyzes patient information and eye images to generate a PDF report indicating the presence and severity of cataracts. By creating color masks and incorporating range checks, our program accurately detects cataracts and provides essential information for quantifying the severity of the condition. This solution facilitates early detection and intervention by providing doctors with comprehensive reports for efficient diagnosis.
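The color-mask and range-check idea can be sketched as follows: count the fraction of pixels in an eye region whose color falls inside a target range, then map that fraction to a severity label. This is an illustration in plain Python rather than the project's code; the color bounds, the `mild` and `severe` cut-offs, and the labels are all assumed values. With OpenCV, `cv2.inRange` performs the same per-pixel range test on image arrays.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # one (B, G, R) or (H, S, V) triple


def in_range(pixel: Pixel, lower: Pixel, upper: Pixel) -> bool:
    """True when every channel lies within its inclusive [lower, upper] bound."""
    return all(lo <= c <= hi for c, lo, hi in zip(pixel, lower, upper))


def mask_fraction(pixels: List[Pixel], lower: Pixel, upper: Pixel) -> float:
    """Fraction of pixels selected by the color mask (0.0 for an empty region)."""
    if not pixels:
        return 0.0
    hits = sum(1 for p in pixels if in_range(p, lower, upper))
    return hits / len(pixels)


def grade(fraction: float, mild: float = 0.1, severe: float = 0.5) -> str:
    """Map the masked fraction to a label; both cut-offs are hypothetical."""
    if fraction >= severe:
        return "severe"
    if fraction >= mild:
        return "mild"
    return "none"


if __name__ == "__main__":
    # Toy pupil region: 3 whitish (cloudy) pixels and 7 dark pixels.
    region = [(200, 200, 200)] * 3 + [(20, 20, 20)] * 7
    cloudy = mask_fraction(region, lower=(150, 150, 150), upper=(255, 255, 255))
    print(cloudy, grade(cloudy))
```

Any clinically meaningful cut-offs would need to be calibrated against graded reference images.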

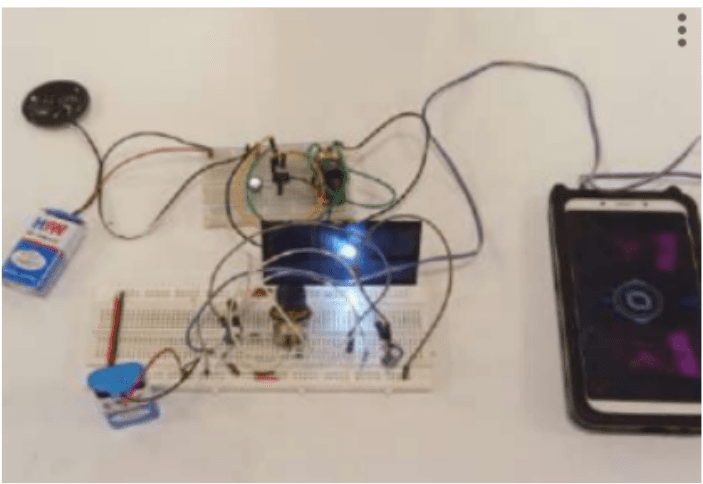

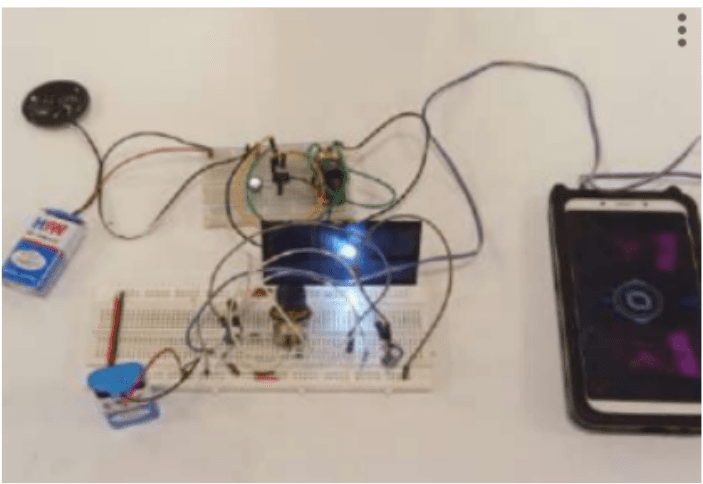

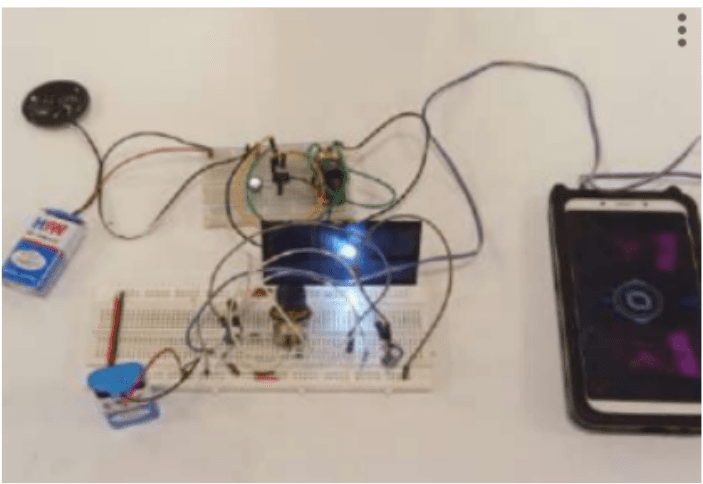

The Future of LiFi Technology to Transfer the Data

Li-Fi is a novel wireless technology that provides connectivity within a network. The name is an abbreviation of light fidelity, a term proposed by German physicist Harald Haas. Li-Fi transmits data through an LED light bulb whose intensity fluctuates faster than the human eye can follow. Because it uses light instead of radio frequencies, Li-Fi is in practice free of radio interference and is considered safer than radio technologies such as Wi-Fi or cellular networks. Radio frequency communication requires complex radio circuitry, antennas, and receivers, while Li-Fi is much simpler and uses direct modulation techniques.
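One simple form of direct intensity modulation is on-off keying, where the LED is switched on for a 1 bit and off for a 0 bit. The sketch below is an illustration under that assumption, not a description of any particular Li-Fi product; the function names and the 0.5 detection threshold are hypothetical.

```python
from typing import List


def encode_ook(data: bytes) -> List[int]:
    """Turn bytes into LED states, most significant bit first (1 = on, 0 = off)."""
    return [(byte >> bit) & 1 for byte in data for bit in range(7, -1, -1)]


def decode_ook(levels: List[float], threshold: float = 0.5) -> bytes:
    """Recover bytes from received light levels by thresholding each sample.

    Trailing samples that do not fill a whole byte are ignored.
    """
    bits = [1 if level > threshold else 0 for level in levels]
    out = bytearray()
    for start in range(0, len(bits) - len(bits) % 8, 8):
        byte = 0
        for bit in bits[start:start + 8]:
            byte = (byte << 1) | bit
        out.append(byte)
    return bytes(out)


if __name__ == "__main__":
    states = encode_ook(b"LiFi")
    # Simulate a photodiode reading: bright is about 0.9, dark is about 0.1.
    received = [0.9 if s else 0.1 for s in states]
    print(decode_ook(received))
```

A real link would also need clock recovery and framing so the receiver knows where each bit and byte begins.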

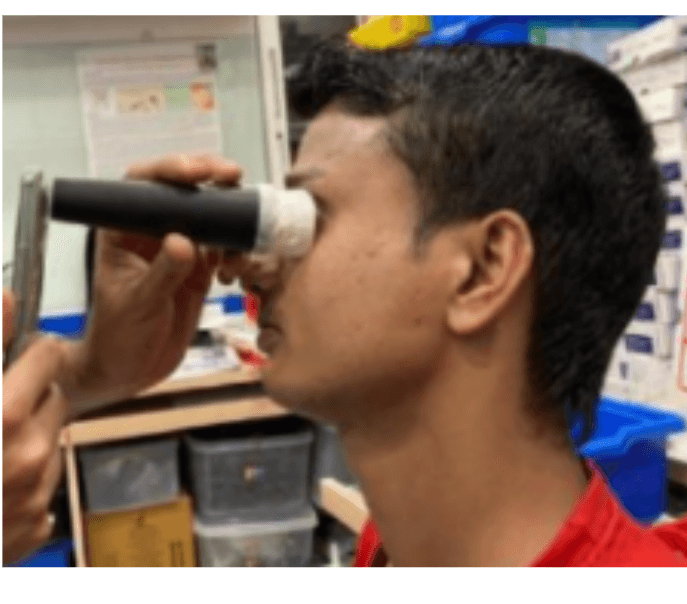

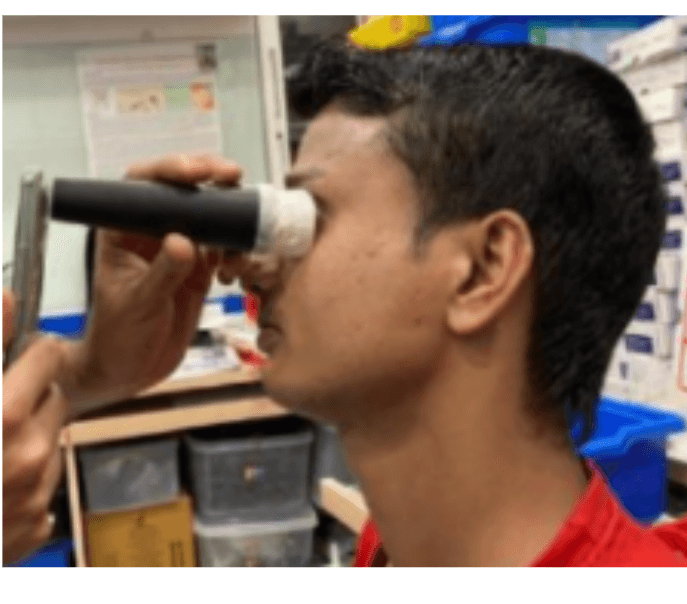

Novel Approach to Detect Anaemia Using Computer Vision and Machine Learning

While conducting workshops on menstrual health for economically disadvantaged girls in municipal schools, I observed common signs of iron deficiency anaemia, including dark circles under their eyes, stunted growth, frailty, pale palms, and persistent fatigue. Many were unaware of anaemia. Early detection is crucial, especially among children and women, to prevent health issues and ensure proper development. Traditional blood tests are impractical in resource-limited settings like India. To address this, Growth Gauge utilizes smartphone technology and machine learning to predict anaemia probability based on eye images. This cost-effective and accessible solution can support large-scale health programs, enhancing their effectiveness in combating anaemia.

Quantification of Microplastic in Domestic Greywater Using Image Processing and Machine Learning at Microscopic Level

Microplastics released by washing synthetic textiles contribute significantly to ocean pollution, and innovative methods are needed to detect them in wastewater. This project aims to create a prototype for real-time monitoring of microplastics in greywater released from household washing machines. The prototype captures images with a camera, stores and analyzes the data in the cloud, and applies computer vision and machine learning techniques to detect microplastics in the images. It also includes a system for logging and monitoring microplastics in greywater. The prototype can be used by the government to regulate the amount and type of microplastics released by various types of clothes.

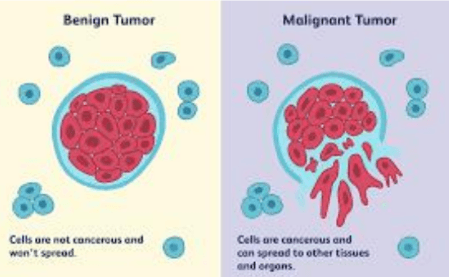

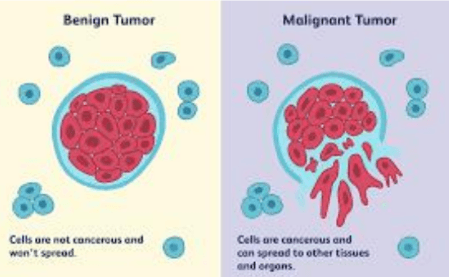

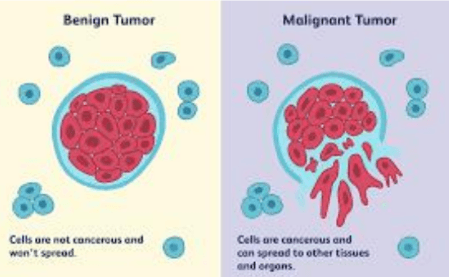

Creating a Haptic 4D Model Along With Machine Learning Analysis by Developing a Non-Invasive Pressure Mapping Method to Screen for Genital Skin Cancer

Early detection of genital skin cancer is hindered by factors such as privacy concerns, social barriers, and discomfort. Traditional biopsy methods, though accurate, can cause pain and infection in the genital region. Our engineering objective is to develop a non-invasive screening method using machine learning. We created a mobile app that processes lesion images through a Deep Convolutional Neural Network (DCNN), achieving 83% accuracy in classifying malignant lesions. Additionally, we use a pressure mapping kit to create a file for flexible 3D printing, providing tactile feedback to support accurate diagnosis.

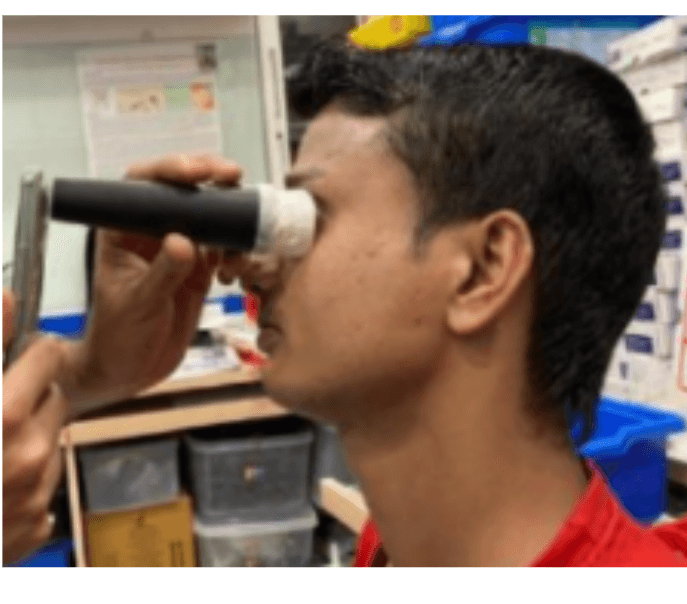

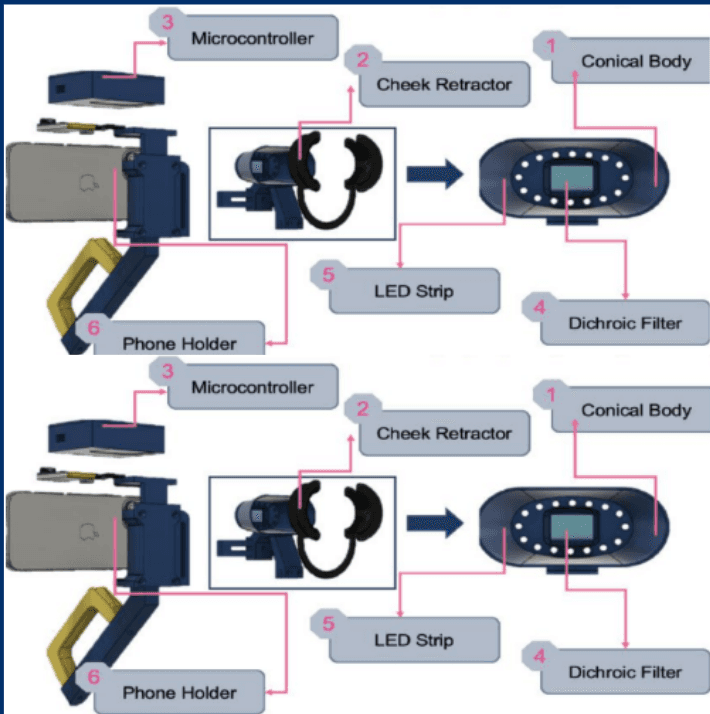

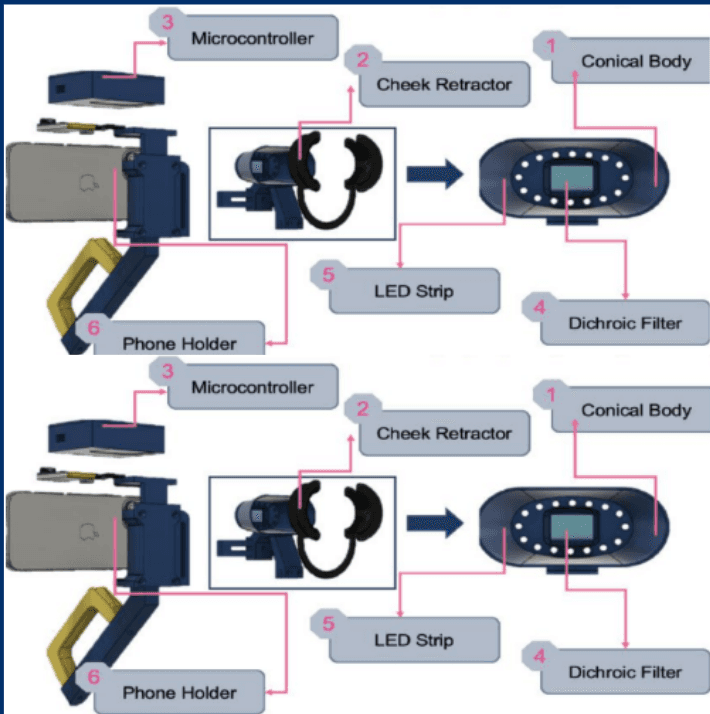

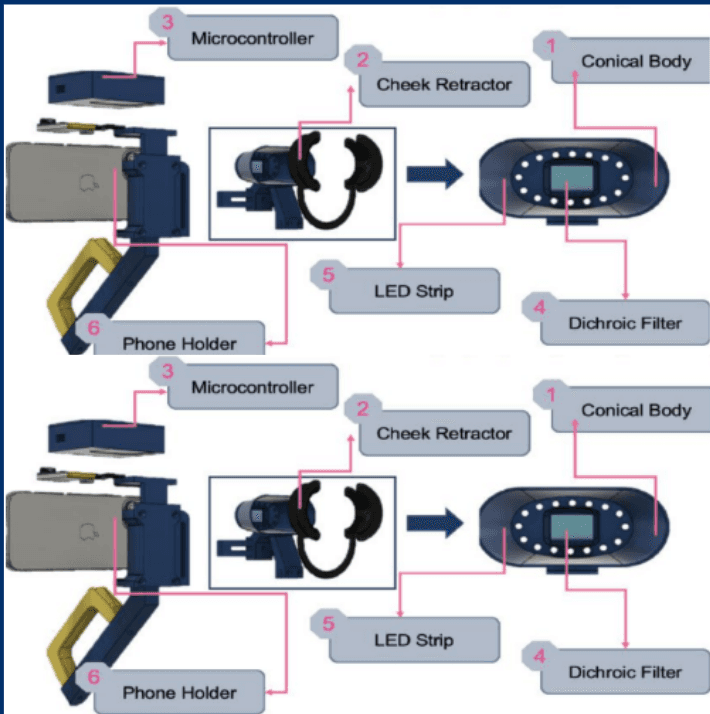

MouthScope: Autonomous Detection of Oral Precancerous Lesions by Fluorescent Imaging

Oral cancer (OC), highly treatable in its early stages, is often diagnosed late in rural India because of a lack of accessible screening. MouthScope uses AI to automate OC screening, making it more accessible. It scans the oral cavity for potentially cancerous lesions without professional intervention. The portable device works on the auto-fluorescence principle: a phone camera captures color differences, which are analyzed by machine learning models (ResNet_v2 and YOLOv5) with 96% accuracy. It supports both real-time self-checkups and mass screening. Tested on 24 patients, MouthScope clearly distinguished malignant from normal tissue. By using smartphones and machine learning, MouthScope eliminates the need for extensive infrastructure, making mass OC screening more attainable for rural India.
