Psychology & AI

Pair human insight with AI to unlock behavior patterns, cognition, and mental-health solutions.

Intelligent Deep-Learning-Based App for Music Therapy of Autistic Children

Abstract: This study explores the creation of an intelligent application that supports music therapy for children with autism using deep learning techniques. The application, built with the Tkinter Python library (a desktop GUI toolkit), is designed to achieve seven essential therapeutic objectives. One of its primary features is the generation of personalized treatment plans using classification neural networks. These plans are tailored to each child's medical history, usage patterns, and trend analysis, providing a highly individualized therapeutic experience. An intuitive user interface supports accessibility and engagement, while real-time feedback mechanisms support ongoing therapeutic interventions. The research seeks to address the unique needs of autistic children and to foster a supportive, adaptive therapeutic environment.

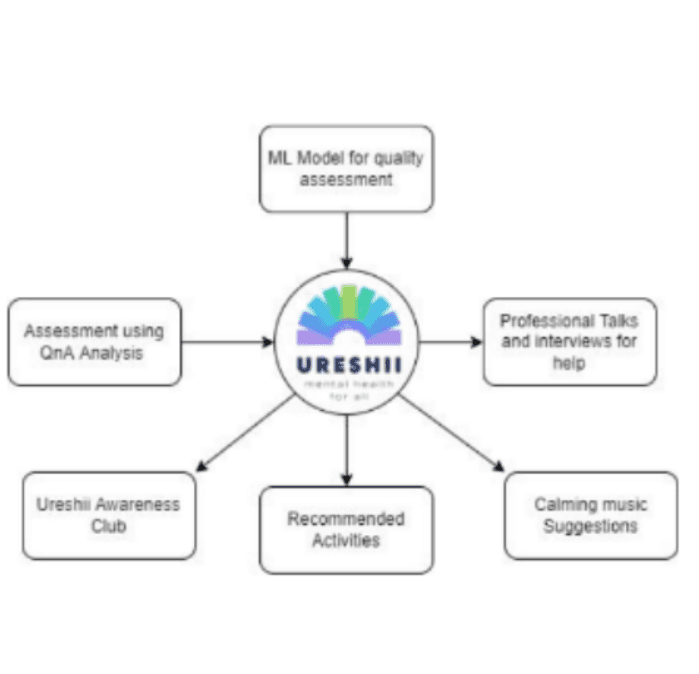

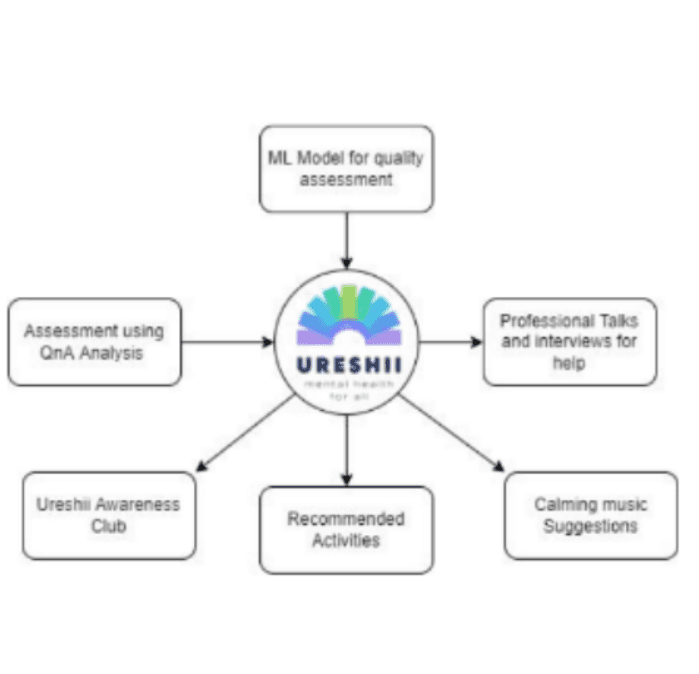

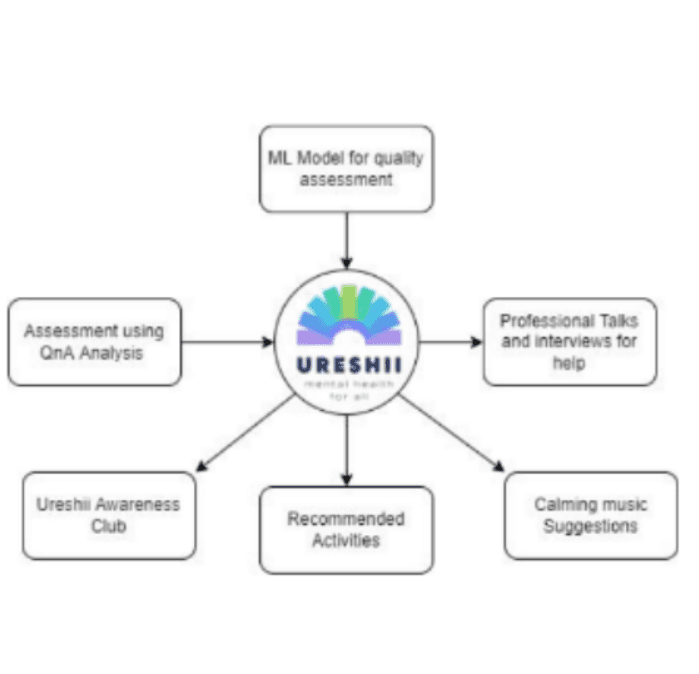

URESHII – Improving One’s Mental Wellbeing

"Ureshii" is a mental health app that combines machine learning with behavioral activation therapy to provide personalized recommendations for improving users' well-being. The app first estimates the user's dopamine, serotonin, oxytocin, and endorphin scores from a daily 5-question survey based on the PHQ-9 questionnaire. Using a k-nearest neighbors (KNN) algorithm, the app groups users with similar profiles and hormonal score differences. A recommender system then suggests activities that have positively affected the mental health of users in the same group. For example, if one user finds dancing rewarding, the app recommends similar activities to another user with a comparable hormonal profile. The app continuously learns from user responses and adjusts its recommendations and group assignments accordingly.
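
The neighbor-based recommendation step could look roughly like the sketch below. It assumes each user is represented by four numeric survey-derived scores and that the app stores which activities each user rated rewarding. Plain Euclidean distance, the value of k, and the names `recommend`, `nearest_users`, and the sample data are hypothetical; the app's actual distance measure and parameters are not described.

```python
import math
from collections import Counter

# Hypothetical survey-derived scores: (dopamine, serotonin, oxytocin, endorphin).
Profile = tuple[float, float, float, float]


def euclidean(a: Profile, b: Profile) -> float:
    """Straight-line distance between two score profiles."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_users(target: Profile, profiles: dict[str, Profile], k: int) -> list[str]:
    """Ids of the k users whose profiles are closest to `target`."""
    return sorted(profiles, key=lambda uid: euclidean(target, profiles[uid]))[:k]


def recommend(target: Profile, profiles: dict[str, Profile],
              rewarding: dict[str, list[str]], k: int = 2, top_n: int = 3) -> list[str]:
    """Activities most often rated rewarding by the target's k nearest neighbours."""
    votes = Counter()
    for uid in nearest_users(target, profiles, k):
        votes.update(rewarding.get(uid, []))
    return [activity for activity, _ in votes.most_common(top_n)]
```

With this setup, a new user whose profile lies closest to someone who rated dancing rewarding sees dancing near the top of the list. Because neighbors are recomputed from the latest scores, the groupings shift automatically as users' daily survey answers change.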

Vibration Analysis of 3D-Printed Airless Tires

This research investigates the vibration properties of 3D-printed airless tires, which have the potential to transform tire design and transportation efficiency. Through extensive vibration experiments, three distinct tire structures were compared. Thermoplastic polyurethane (TPU) was chosen as the 3D-printing material because of its good damping and deformation properties. The experimental setup was designed to simulate real-world road conditions, and an MPU6050 sensor captured tire vibrations along three axes. Fast Fourier Transform (FFT) analysis revealed the vibrational properties of each tire structure, allowing a comparative assessment of their stability. Structure 1 was the most vibration-stable, followed by Structure 3 and then Structure 2.
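
The FFT step can be illustrated with a minimal sketch, assuming one axis of MPU6050 acceleration has been logged as a list of floats at a known, fixed sample rate. For transparency it uses a direct discrete Fourier transform from the standard library; an FFT such as `numpy.fft.rfft` produces the same bins faster. The function names, sample rate, and test signal are hypothetical.

```python
import cmath
import math


def magnitude_spectrum(samples: list[float], sample_rate: float) -> list[tuple[float, float]]:
    """Return (frequency_hz, amplitude) pairs for the non-negative frequency bins.

    This is a direct O(N^2) discrete Fourier transform; an FFT computes the
    same bins in O(N log N), which matters for long recordings.
    """
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove the constant offset (e.g. gravity)
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(centered))
        # Scaling by 1/n makes a sine of amplitude A appear as A/2 in its bin
        # (for bins strictly between 0 Hz and the Nyquist frequency).
        spectrum.append((k * sample_rate / n, abs(coeff) / n))
    return spectrum


def dominant_frequency(samples: list[float], sample_rate: float) -> float:
    """Frequency (Hz) of the strongest vibration component, skipping the 0 Hz bin."""
    spectrum = magnitude_spectrum(samples, sample_rate)[1:]
    return max(spectrum, key=lambda pair: pair[1])[0]
```

Comparing peak amplitudes or total spectral energy across the three structures, recorded under identical test conditions, is one possible way to rank vibration stability; the exact criterion used in the study is not stated in the abstract.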

A Machine Learning Approach for Scalable Early-Age Dyslexia Detection Correlating Phonological Speech Analysis and Eyeball Tracking

Dyslexia is a complex reading disability that can be hard to detect early because it presents differently in each individual. Although its causes are not precisely understood, many people clearly struggle with reading for no apparent reason. To address this, our solution takes a novel approach that combines eye-tracking and speech-recognition technologies. By analyzing eye movements and speech patterns, the system aims to provide early, accurate predictions of dyslexia and enable timely intervention.

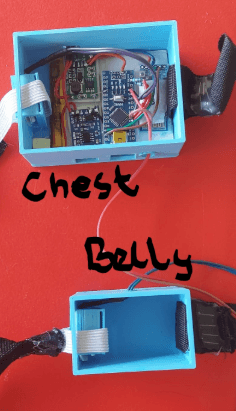

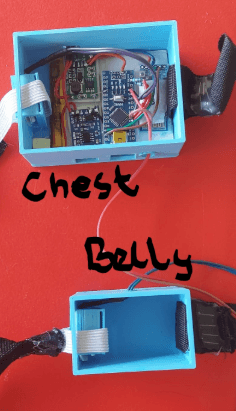

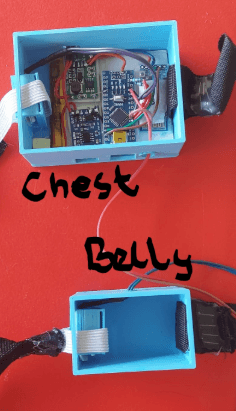

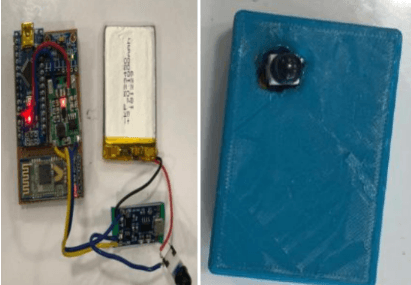

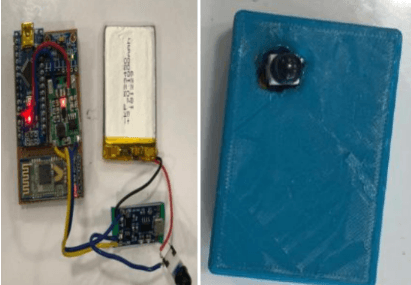

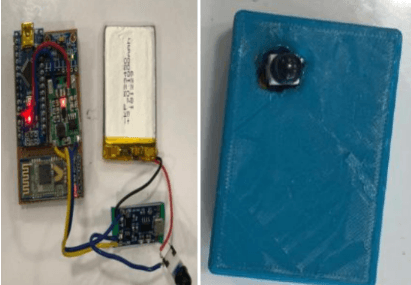

Breath Free – Screening Stress and Anxiety from Breathing Patterns

The product aims to detect early signs of stress and anxiety by using breathing patterns as a vital sign. It uses two 3D-printed box-like structures fitted with force-sensing resistors (FSRs) to measure chest and belly expansion non-invasively. The wireless device connects to a computer via Bluetooth and streams the data serially. A Python program collects the data and generates an A4-size infographic report covering chest and belly breathing patterns, breathing consistency and flow, breathing rate, and the number of deep, shallow, and normal breaths. Testing showed that the breathing patterns of working professionals in stressful jobs differ from those of schoolchildren. The device was also tested during gaming to assess excitement and stress, both of which can affect breathing. By monitoring breathing patterns and guiding users toward appropriate physical practices, the product could help manage stress and anxiety.
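
A minimal sketch of how breathing rate and breath depth might be derived from one FSR channel (chest or belly), assuming readings arrive as a list of numbers at a fixed sample rate. The mean-crossing segmentation, the function names, and the shallow/deep thresholds are hypothetical choices for illustration, not the product's documented method.

```python
def split_breaths(signal: list[float]) -> list[list[float]]:
    """Split a signal into complete breath cycles at each upward crossing of its mean.

    Samples before the first crossing and after the last one are dropped
    because they do not form a complete cycle.
    """
    mean = sum(signal) / len(signal)
    starts = [i for i in range(1, len(signal)) if signal[i - 1] < mean <= signal[i]]
    return [signal[a:b] for a, b in zip(starts, starts[1:])]


def breathing_summary(signal: list[float], sample_rate_hz: float,
                      shallow_below: float, deep_above: float) -> dict[str, float]:
    """Breathing rate plus counts of shallow, normal and deep breaths.

    Breath depth is the peak-to-trough excursion within one cycle, in raw
    sensor units; the two thresholds are hypothetical and need calibration.
    """
    cycles = split_breaths(signal)
    counts = {"shallow": 0, "normal": 0, "deep": 0}
    for cycle in cycles:
        depth = max(cycle) - min(cycle)
        if depth < shallow_below:
            counts["shallow"] += 1
        elif depth > deep_above:
            counts["deep"] += 1
        else:
            counts["normal"] += 1
    if not cycles:
        return {"breaths_per_min": 0.0, **counts}
    mean_cycle_len = sum(len(c) for c in cycles) / len(cycles)  # samples per breath
    return {"breaths_per_min": 60.0 * sample_rate_hz / mean_cycle_len, **counts}
```

Real FSR data is noisy, so a practical version would smooth the signal and add hysteresis around the crossing level to avoid counting jitter as extra breaths.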

Hybrid Machine Learning Applications for Classifying Sentiment in Political Tweets

The study proposes a hybrid machine learning algorithm that combines natural language processing (NLP) with a long short-term memory (LSTM) network for sentiment analysis of political tweets. The NLP component extracts keywords that summarize each tweet and correlates them with positive or negative sentiment, while the LSTM component classifies those keywords as positive or negative. Applied to a dataset of 1.6 million political tweets, the model achieved an accuracy of 78%, a loss of 0.456, a precision of 79%, and an F1 score of 0.78. The aim is to analyze public sentiment in tweets to detect emerging crises early and inform political strategy.
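
The reported accuracy, precision, and F1 score are linked by standard definitions. The sketch below computes them for the positive class from confusion-matrix counts; the function names are hypothetical, and the study's averaging scheme (per-class or macro-averaged) is not stated.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Accuracy, and precision/recall/F1 for the positive class, from confusion-matrix counts."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / total, "precision": precision, "recall": recall, "f1": f1}


def implied_recall(precision: float, f1: float) -> float:
    """Solve F1 = 2PR / (P + R) for R, given P and F1 of the same class."""
    return f1 * precision / (2 * precision - f1)
```

If the reported precision (0.79) and F1 score (0.78) refer to the same class, `implied_recall(0.79, 0.78)` gives a recall of about 0.77; if the figures were averaged differently, this relation does not hold exactly.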

SAATHI – Your Fitness Companion

Our project addresses the lack of regular physical exercise among the elderly with a simple, social solution. Through our research, we found that many seniors struggle with technology but prefer exercising in groups. To address this, we developed an exercise wristband and a mobile app that let users track their steps, set goals, and form exercise groups. The app is designed to be user-friendly, with clear icons and few pages. By connecting with others through the app, users can find motivation and companionship that encourage them to stay active.

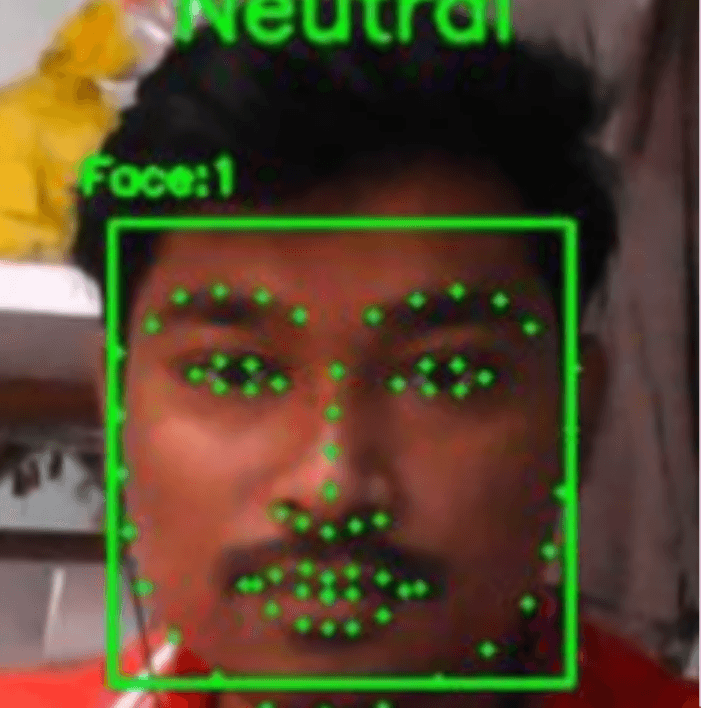

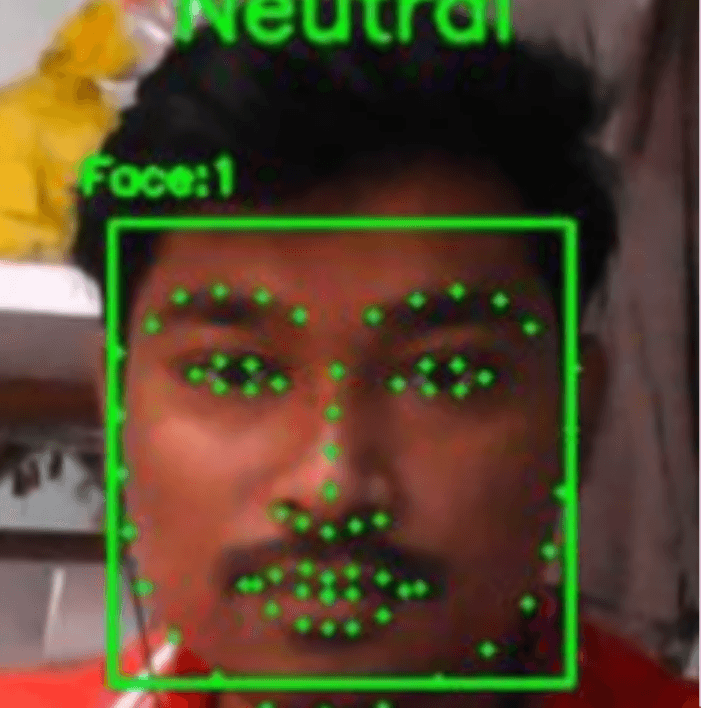

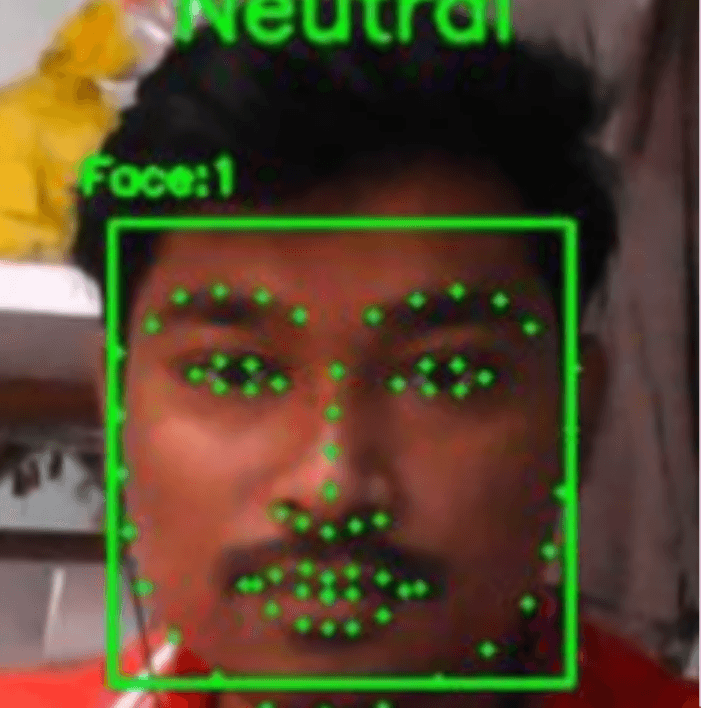

Deep Learning and Computer Vision-Based Attentiveness Tracking for Autistic Students in Online Learning Environments

Individuals with Autism Spectrum Disorder (ASD) often struggle with attention, a difficulty exacerbated by the shift to virtual learning during the COVID-19 pandemic. This paper presents 'Panacea', deep learning software designed to track the attentiveness of autistic students during online lessons. By analyzing facial expressions, orientation, and pupil movement, Panacea gives instructors real-time data that helps them gauge students' concentration levels. The system uses machine learning to detect emotions and a probability density function to classify attentiveness, achieving 97% accuracy.
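
The abstract does not specify how the probability density function is applied. One common pattern, shown here as a hypothetical sketch, models a per-window engagement score for each class with a normal distribution and picks the class with the higher prior-weighted density (a Bayes decision rule). The score itself, the Gaussian form, and all parameter values below are assumptions for illustration.

```python
import math


def gaussian_pdf(x: float, mean: float, std: float) -> float:
    """Density of the normal distribution N(mean, std^2) at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2 * math.pi))


# Hypothetical class-conditional models of an engagement score in [0, 1],
# e.g. a combination of gaze-on-screen time and emotion-model outputs.
CLASSES = {
    "attentive": {"mean": 0.75, "std": 0.12, "prior": 0.6},
    "inattentive": {"mean": 0.30, "std": 0.15, "prior": 0.4},
}


def classify_attentiveness(score: float) -> str:
    """Return the class with the highest prior-weighted density at the observed score."""
    def weighted_density(name: str) -> float:
        params = CLASSES[name]
        return params["prior"] * gaussian_pdf(score, params["mean"], params["std"])
    return max(CLASSES, key=weighted_density)
```

In a real system, the means, standard deviations, and priors would be estimated from labelled lesson recordings rather than set by hand.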

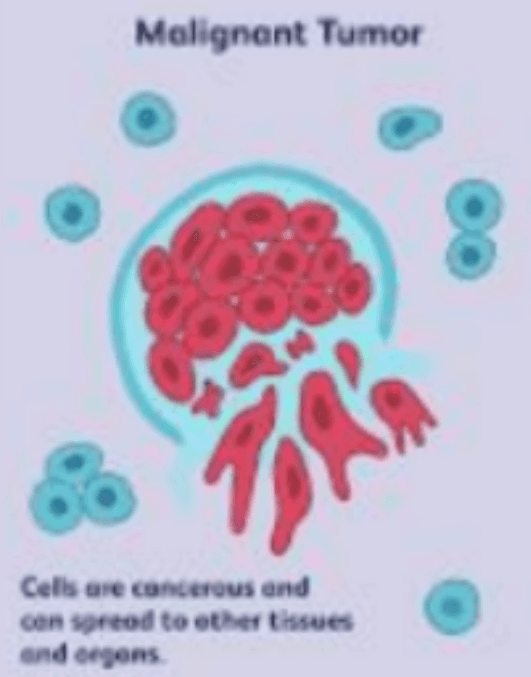

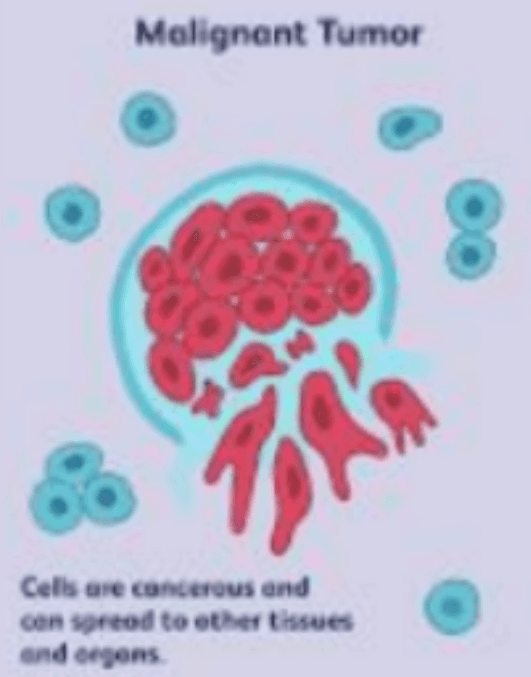

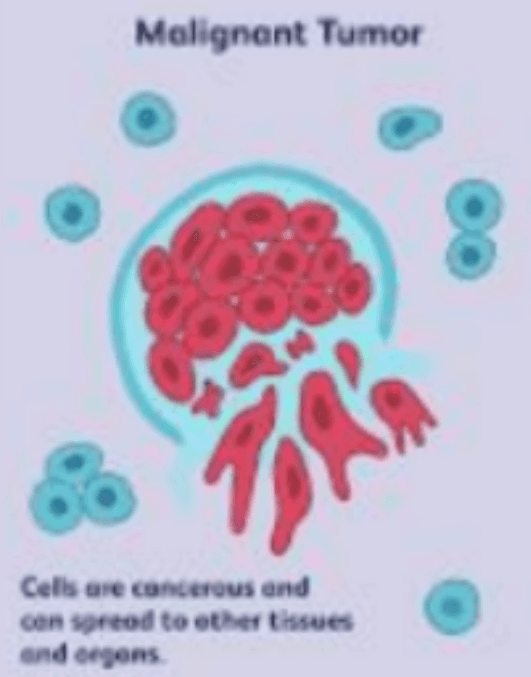

CancerEase: A New Approach Towards Early Prediction of Breast Cancer Using MRI Scan Data

Breast cancer is a significant health concern, affecting about 1 in 8 women during their lifetime and placing a heavy burden on healthcare systems. To aid early diagnosis, we present CancerEase, machine learning software that uses MRI scan data to classify breast tumors as malignant or benign. CancerEase employs three models, namely a neural network, logistic regression, and a k-nearest neighbors (KNN) classifier, and selects the most accurate one for diagnosis. Trained on MRI data from Kaggle contributed by professionals, the neural network achieved the highest accuracy, approximately 92.98%. These results suggest that CancerEase is a promising tool for supporting early breast cancer diagnosis.
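
The "train several models, keep the most accurate" step can be sketched without committing to a framework. The sketch assumes each trained model is wrapped as a function from a feature vector to a label (1 = malignant, 0 = benign) and that accuracy is measured on a held-out validation set. The names and the toy models used to exercise it are hypothetical stand-ins for the neural network, logistic regression, and KNN classifiers.

```python
from typing import Callable, Sequence

# A trained model wrapped as a function: feature vector -> label (1 = malignant, 0 = benign).
Classifier = Callable[[Sequence[float]], int]


def accuracy(model: Classifier, X: Sequence[Sequence[float]], y: Sequence[int]) -> float:
    """Fraction of samples whose predicted label matches the true label."""
    correct = sum(1 for features, label in zip(X, y) if model(features) == label)
    return correct / len(y)


def select_best_model(models: dict[str, Classifier],
                      X_val: Sequence[Sequence[float]],
                      y_val: Sequence[int]) -> tuple[str, float]:
    """Score every candidate on the validation set; return (name, accuracy) of the best."""
    scores = {name: accuracy(model, X_val, y_val) for name, model in models.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]
```

Choosing the model on a validation split and then reporting its accuracy on a separate test split avoids an optimistically biased estimate, since the selection itself uses the validation data.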

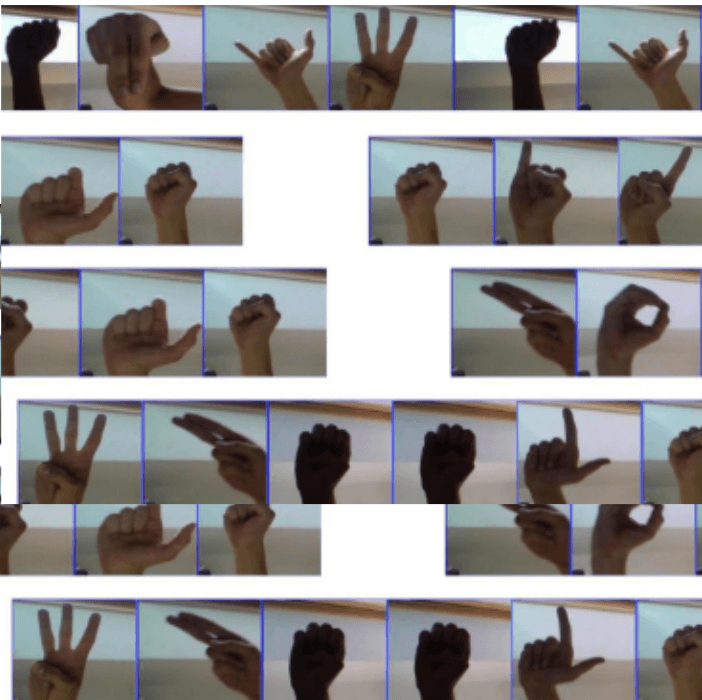

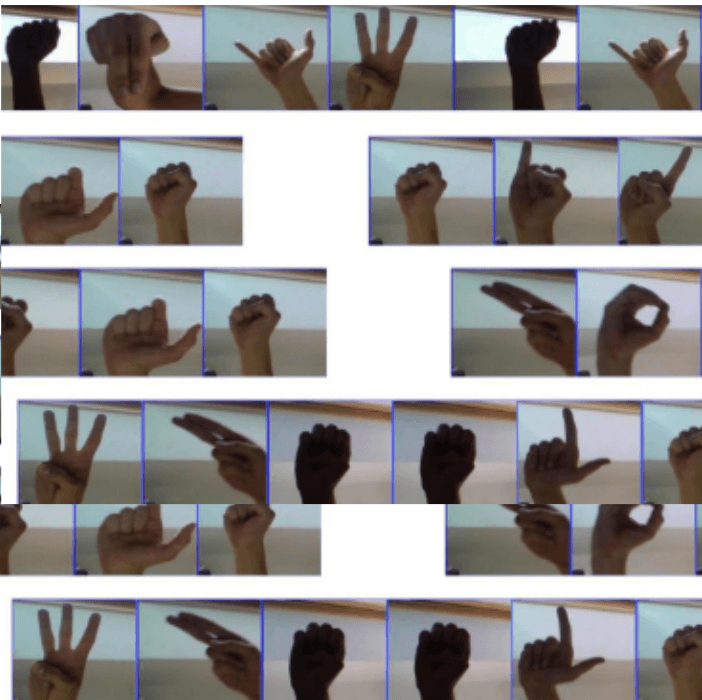

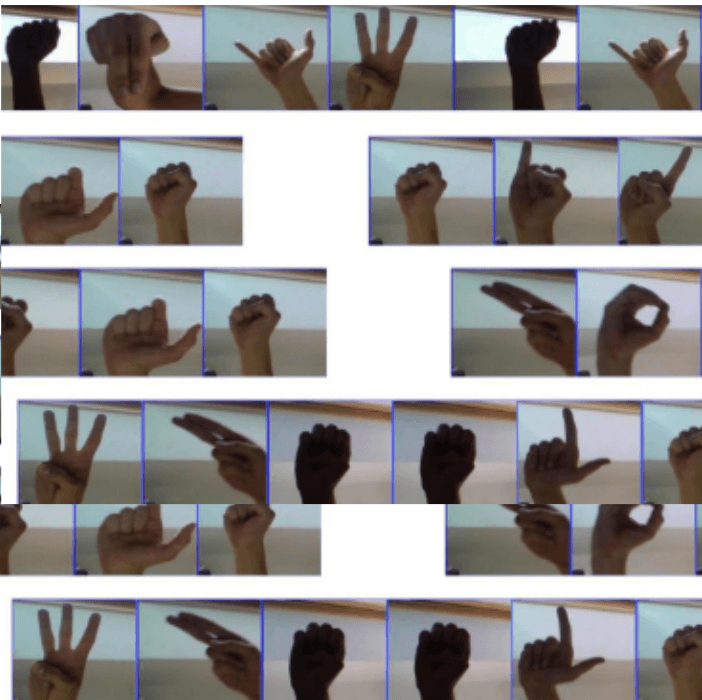

Converting YouTube Videos to American Sign Language Using a Convolutional Neural Network and Video Processing

Sign language is a vital mode of communication for deaf and hard-of-hearing people, who make up around 5% of the global population. Although YouTube offers subtitles in many languages, it lacks sign language subtitles. This study aims to develop American Sign Language (ASL) subtitles for YouTube videos. The proposed method is a three-phase framework that integrates a deep learning-based Convolutional Neural Network (CNN) with image and video processing techniques. A torch-based CNN model achieved 99.982% accuracy in training and 98% in testing. The study demonstrates the practical application of the integrated method on a randomly selected YouTube video.

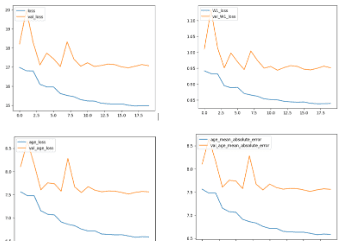

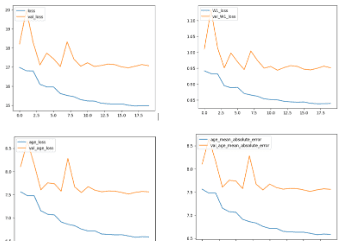

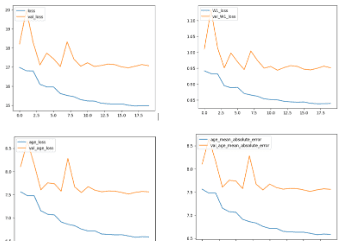

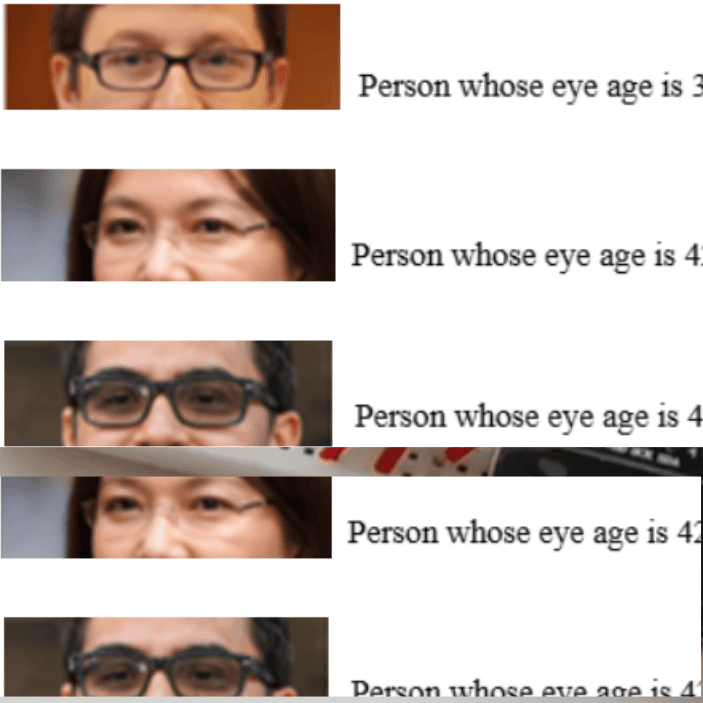

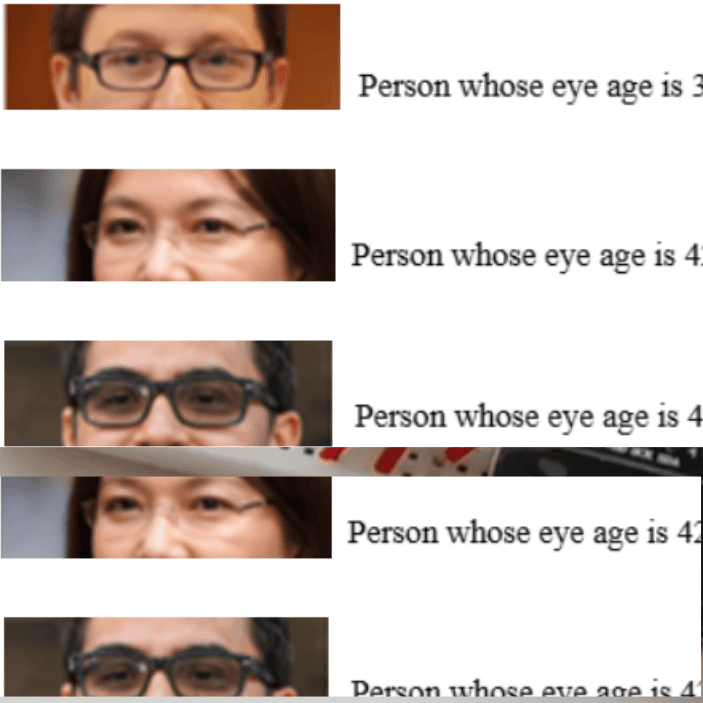

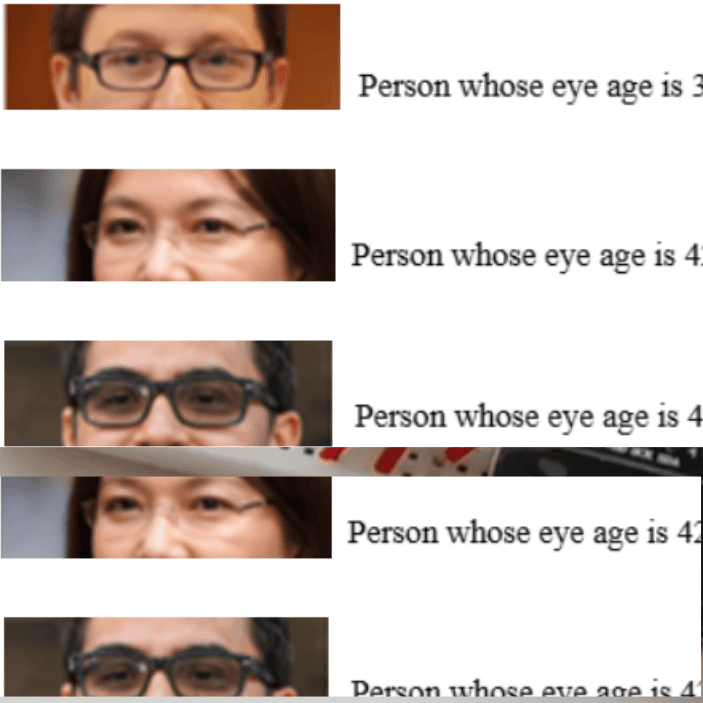

Ocular Age Estimation

Accurate eye age assessment is increasingly valuable in a wide range of applications, including biometrics and medical diagnostics. This project develops a model that estimates the age of the eye using convolutional neural networks (CNNs), achieving an accuracy of 90%. CNNs, which are well suited to image processing, are central to the approach and support a structured, methodical workflow. The process begins with collecting a large dataset of eye photographs, with an emphasis on diversity across age groups and eye disorders. By assessing eye age accurately, the model can help businesses tailor their products and services to specific age groups and improve user experience. The successful use of CNNs in this model highlights their ability to recognise complex ageing-related patterns, reinforcing their status as a strong tool for challenging image-processing tasks.

LSTM-Based Sign Language Detection System

Sign language recognition plays a crucial role in facilitating communication and inclusivity for individuals with hearing impairments. This research paper presents a novel method for detecting sign language gestures using Long Short-Term Memory (LSTM) networks. By combining the sequential data-processing capabilities of LSTM networks with feature engineering, the authors developed an accurate model for predicting sign language gestures. The paper discusses in depth the data collection, data pre-processing, and feature engineering techniques used to improve the LSTM model's effectiveness. The Keras API for TensorFlow was used to build a Sequential model. The paper also presents a comparative study of how accuracy changes with the size of the LSTM layer and the dropout rate. The best-performing model, with an accuracy of 91.28%, was then used to test performance in real-life applications.
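
The "size of the LSTM layer" is its number of hidden units (the `units` argument of Keras's `LSTM` layer), which is the length of the hidden and cell state vectors updated at every time step. The minimal pure-Python sketch below shows one LSTM layer's forward pass over a sequence of feature vectors (for example, per-frame hand-keypoint features, an assumption here) using random, untrained weights. It illustrates the gate arithmetic only and is not the paper's Keras model; the class and function names are hypothetical.

```python
import math
import random


def sigmoid(x: float) -> float:
    """Logistic function used by the input, forget and output gates."""
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix: list[list[float]], vector: list[float]) -> list[float]:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


class LSTMLayer:
    """One LSTM layer with `hidden_size` units and random, untrained weights."""

    GATES = ("input", "forget", "output", "candidate")

    def __init__(self, input_size: int, hidden_size: int, seed: int = 0):
        rng = random.Random(seed)
        width = input_size + hidden_size  # each gate sees [x_t, h_{t-1}]
        self.hidden_size = hidden_size
        self.weights = {
            gate: [[rng.uniform(-0.1, 0.1) for _ in range(width)] for _ in range(hidden_size)]
            for gate in self.GATES
        }
        self.biases = {gate: [0.0] * hidden_size for gate in self.GATES}

    def step(self, x, h, c):
        """Advance one time step; return the new hidden and cell states."""
        z = list(x) + list(h)
        pre = {
            gate: [a + b for a, b in zip(matvec(self.weights[gate], z), self.biases[gate])]
            for gate in self.GATES
        }
        i = [sigmoid(v) for v in pre["input"]]        # how much new information to write
        f = [sigmoid(v) for v in pre["forget"]]       # how much of the old cell state to keep
        o = [sigmoid(v) for v in pre["output"]]       # how much of the cell state to expose
        g = [math.tanh(v) for v in pre["candidate"]]  # candidate values to write
        c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
        h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
        return h_new, c_new

    def run(self, sequence):
        """Process a whole sequence and return the final hidden state."""
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        for x in sequence:
            h, c = self.step(x, h, c)
        return h
```

Increasing `hidden_size` gives the layer more capacity to remember gesture context across frames, at the cost of more parameters. This trade-off is why layer size and dropout rate are natural variables for a comparative study.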
